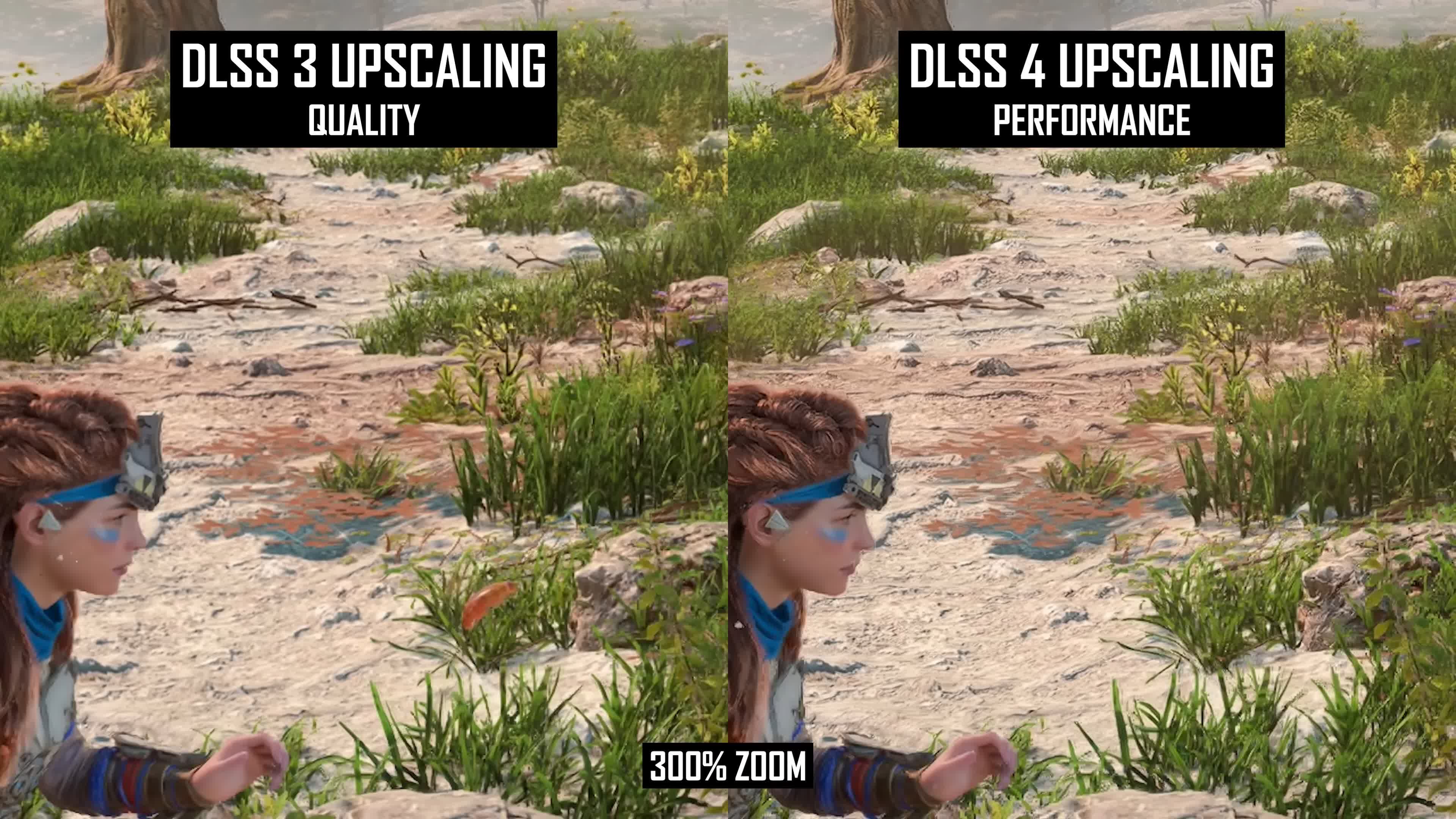

The most interesting side of Nvidia's new RTX 50 series is not the GPUs themselves, not even close. It's DLSS 4 upscaling that steals the spotlight, with a new AI model and much improved image quality.

DLSS 4 Upscaling at 4K is Actually Pretty Amazing

- Thread starter Scorpus

0-4% of ROPs died to make this article possible.

I don't care. It's behind a massive paywall of the company that lost interest in its former core consumers - gamers.

AMD, Intel, or in the future - Moore Threads.

Reporting on Nvidia in any positive way, when they spit in your face with their recent pricing and laziness blunders, is becoming shilling.

While I agree with your frustration, Nvidia's core customers have not been gamers since 2006. Are they spitting in "our" faces? Meh, you could say that depending on your stance. However, "gamers" are not what Nvidia is focused on, and haven't been for a long time.

https://www.cio.com/article/646471/how-nvidia-became-a-trillion-dollar-company.html

godrilla

Posts: 1,732 +1,175

"First time I see Nvidia being praised here. But then the current 50 series is plagued with launch problems now."

Maybe they caught the Intel bug? The long-term use of these is questionable. If these were affected by the TSMC earthquake, I wonder whether TSMC is equally to blame here. One monopoly is too scared of retaliation from another monopoly to state anything publicly.

Update: the rumors of oversupply are making more sense now. People are losing interest daily.

godrilla

Posts: 1,732 +1,175

It's true, but why not exit gaming and go all in on AI, unless there is internal conflict on the board? Someone on the board wants to paint gaming as not profitable or not worth the risk or ROI. Gamers are caught in the crossfire, imo. By my guesstimate, this might solidify Nvidia going all in on AI, and Blackwell gaming is just a smoke screen for its competition: look, we are still focused on gaming. Sike!

Thatsdisgusting

Posts: 270 +345

You wrote:

"like DLSS 3 DLAA, which outperforms DLSS 4 Quality mode."

Yet I can't see a single instance of that in the performance tests. Typo?

Btw, regarding the performance drop: did you try forcing ray reconstruction OFF where possible? I found that in Cyberpunk it is RR that drops performance, not the Transformer model itself. In Alan Wake it is the opposite: the traditional denoising tools are so inefficient that RR is a good compromise.

m3tavision

Posts: 1,700 +1,444

Upscaling is great for single-player games, that you can pause...

"For the DLSS 3 examples, each game was upgraded to the final version of DLSS 3 (3.8.10) using the DLSS Swapper utility."

Isn't this the latest version - NVIDIA DLSS DLL 310.2?

NVIDIA DLSS DLL

Vulcanproject

Posts: 1,777 +3,404

It works well but not for every game. Forza Motorsport is still trash with DLSS, lots of ghosting. I can see this is another pretty significant step for most games. Especially ones now implementing it at official patch level rather than users forcing it with driver changes.

I was using it in both Cyberpunk and Indiana Jones the past few weeks and it is genuinely like finding another higher setting. Texture handling and motion are leagues better.

Balanced mode on DLSS 4 is easily better than DLSS 3 quality, without doubt. Even performance mode is superior in many cases.

arrowflash

Posts: 631 +777

The only game where I have ever used DLSS is Cyberpunk, because it's one of those games with crap TAA implementation. Native resolution + TAA looks extremely blurry. With DLSS the game looks much sharper.

kira setsu

Posts: 859 +1,026

The real issue is that even when Nvidia doesn't care, the competition still can't beat them, or even get to the same level.

"For the DLSS 3 examples, each game was upgraded to the final version of DLSS 3 (3.8.10) using the DLSS Swapper utility."

Isn't this the latest version - NVIDIA DLSS DLL 310.2?

NVIDIA DLSS DLL

The 300-series versions of DLSS are DLSS4.

Yeah I wouldn't have chosen that numbering convention either.

ZedRM

Posts: 2,644 +1,751

I think someone's definition of "Amazing" needs revision. Even with all this info and these graphs, I'm not seeing "amazing" here. An improvement? Yes. An amazing improvement? No. -rolls eyes-

Eldrach

Posts: 284 +294

Dlss is pretty great - but it needs a good base resolution to upsample from. On a 1080p monitor it's next to useless. Better on a 1440p monitor - but still it's upsampling from a resolution close to 1080p. At 4k it's pretty great and I don't see any reason not to use it.
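To put rough numbers on the base-resolution point, here is a minimal sketch that computes the internal render resolution for each DLSS preset. The per-axis scale factors are the commonly cited defaults and are an assumption here, as is the helper name `internal_resolution`; individual games can override them.

```python
# Commonly cited per-axis render scale for each DLSS preset.
# These values are assumptions for illustration; games may use others.
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def internal_resolution(width, height, mode):
    """Return the (width, height) rendered before upscaling to the output size."""
    scale = DLSS_SCALE[mode]
    return round(width * scale), round(height * scale)


if __name__ == "__main__":
    outputs = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4K": (3840, 2160)}
    for name, (w, h) in outputs.items():
        for mode in DLSS_SCALE:
            rw, rh = internal_resolution(w, h, mode)
            print(f"{name} {mode}: {rw}x{rh}")
```

Under these assumed factors, 4K Quality renders internally at 2560x1440, while 1440p Quality starts from roughly 1707x960, which is why the upscaler has far more to work with on a 4K display.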

ChipBoundary

Posts: 107 +49

It looks like crap, that's why. The resolution is irrelevant. It will never be viable because what it does is try to predict what the next frame is supposed to be. This will ALWAYS result in loss of quality and fidelity. Can it be improved? Yes, but that improvement is extremely finite and has a hard limit.

There is never a resolution or evolution of the technology where it won't look like a smeary mess and cause random glitches and artifacts to appear in your game. It is a waste of time and effort.

ChipBoundary

Posts: 107 +49

The only game where I have ever used DLSS is Cyberpunk, because it's one of those games with crap TAA implementation. Native resolution + TAA looks extremely blurry. With DLSS the game looks much sharper.

Not sure what game you're playing but at max settings with native resolution my Cyberpunk looks clean, crisp, and amazing. With DLSS it is a smeary mess.

ChipBoundary

Posts: 107 +49

So we can agree dlss 3 and earlier versions were garbage, yet fan boys were claiming it was better than native.

Literally no one was saying that. Also, it can NEVER be better than native because it is impossible to be. No matter how much Nvidia improves the technology, it is impossible for it to be anywhere near native or even usable. Anybody that uses any of these upscaling technologies is lying to everyone, including themselves.

Eldrach

Posts: 284 +294

DLSS is not just frame generation - it's also a great upsampling tool. Interpolated frames cause next to no input delay. DLSS 1 was pretty crap - but since DLSS 3 came out the quality has been very good. AI frame generation is a different discussion. DLSS upscaling uses the current frame data and upsamples it based on previous frames. The better the sample, the better the upscaled image it produces, which is why it's best used at 4K, where it renders from a 1440p+ sample. They've mixed in AA and other stuff as well now, so in many cases DLSS actually improves upon native resolution.

A fully native 4k res with DLAA still looks better, but even with a 5090, your framerate will not be great in demanding games - you have to sacrifice raytracing quality and forget about path tracing in games like cyberpunk.

But from reading your comments you have decided to hate it - so I guess you are free to not use it if you don't like it.

The smudging you mentioned is also fixed in DLSS 4.0

The Nvidia fanboys were saying exactly that.

It's not unlikely that Nvidia might be leveraging their gaming expertise to stay ahead while heavily investing in AI. The gaming sector could indeed be a strategic front to keep competitors on their toes, as you suggested.

Why not go all in on AI? I would say that any money gained from these chips is a plus. That being said, I am of the view that Nvidia uses all its best silicon on AI cards, which could explain the defects popping up all over the 5000 series.
